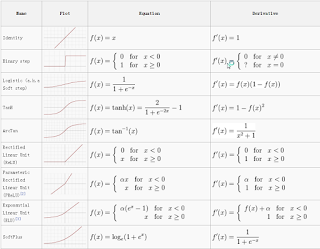

Here are some activation functions that I found pretty useful. To understand them better, let's look at this classification problem from TensorFlow:

```python
import tensorflow as tf
from tensorflow import keras

model = keras.Sequential([
    keras.layers.Flatten(input_shape=(28, 28)),       # 28 x 28 image -> 784 values
    keras.layers.Dense(128, activation=tf.nn.relu),   # hidden layer with ReLU
    keras.layers.Dense(10, activation=tf.nn.softmax)  # output layer: 10 probabilities
])
```

We're building a `Sequential` model. Note that this is a plain fully connected network, not a CNN, because it has no convolutional layers.

a) `Flatten` converts each 28 x 28 image into a 1-D vector of 784 pixel values.

b) `Dense` with the `relu` activation function is a single fully connected layer with 128 units (neurons).

c) The last `Dense` layer uses `softmax` to return the probabilities of 10 different outcomes (they sum to 1). The outcome with the highest probability is the most likely one. Something like this:

```
array([1.0268966e-05, 4.5652584e-07, 2.2796411e-07, 2.6025206e-09,
       4.2522177e-07, 4.1701975e-03, 1.1740666e-05, 2.8226489e-02,
       4.3704877e-06, 9.6757573e-01], dtype=float32)
```

Normally, you take the highest value to get pr...
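The model uses two activation functions, `relu` and `softmax`. Here is a minimal pure-Python sketch of what each one computes. The helpers `relu` and `softmax` below are illustrative names of my own, not TensorFlow's code; on a 1-D input they should give the same numbers as `tf.nn.relu` and `tf.nn.softmax`, up to floating-point precision.

```python
import math


# Illustrative helpers (hypothetical names), not the TensorFlow implementations.
def relu(xs):
    """ReLU: keep positive values, replace negative values with 0."""
    return [max(0.0, x) for x in xs]


def softmax(logits):
    """Softmax: turn raw scores into probabilities that sum to 1.

    The largest score is subtracted first for numerical stability;
    the result is unchanged because softmax is shift-invariant.
    """
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


hidden = relu([-2.0, 0.0, 3.0])
probs = softmax([1.0, 2.0, 3.0])
print(hidden)                          # [0.0, 0.0, 3.0]
print([round(p, 4) for p in probs])    # [0.09, 0.2447, 0.6652]
print(round(sum(probs), 6))            # 1.0
```

ReLU keeps the hidden layer cheap and non-linear, while softmax is used only at the output because it turns the 10 raw scores into a probability distribution.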
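To make the layer descriptions concrete, here is a small sketch of the shape and parameter arithmetic, assuming the model exactly as written above. `flatten` and `dense_params` are hypothetical helper names used only for illustration (no Keras involved). `Flatten` has no trainable parameters, so the total should match the trainable-parameter count that `model.summary()` reports for this model.

```python
# Hypothetical helpers: plain arithmetic, not Keras code.
def flatten(image):
    """Flatten a 2-D list (rows of pixels) into a 1-D list, row by row."""
    return [pixel for row in image for pixel in row]


def dense_params(n_inputs, n_units):
    """Trainable parameters of a fully connected layer:
    one weight per (input, unit) pair plus one bias per unit."""
    return n_inputs * n_units + n_units


image = [[0.0] * 28 for _ in range(28)]  # dummy 28 x 28 image
flat = flatten(image)
print(len(flat))  # 784

hidden_params = dense_params(len(flat), 128)  # 784 * 128 + 128
output_params = dense_params(128, 10)         # 128 * 10 + 10
print(hidden_params, output_params, hidden_params + output_params)
# 100480 1290 101770
```

The 100,480 parameters in the hidden layer come from connecting every one of the 784 inputs to each of the 128 units, which is what "fully connected" means.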
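Taking the highest value from the softmax output is an argmax: find the index of the largest probability, which is the predicted class label (0 to 9). A minimal pure-Python sketch on the example array above; with NumPy you would typically call `np.argmax` instead.

```python
# Softmax output for one image, copied from the example array above.
probs = [1.0268966e-05, 4.5652584e-07, 2.2796411e-07, 2.6025206e-09,
         4.2522177e-07, 4.1701975e-03, 1.1740666e-05, 2.8226489e-02,
         4.3704877e-06, 9.6757573e-01]

# Index of the largest probability = predicted class label.
predicted = max(range(len(probs)), key=lambda i: probs[i])
print(predicted)                    # 9
print(round(probs[predicted], 4))   # 0.9676
print(round(sum(probs), 4))         # 1.0
```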