Using variables and secrets in a GitHub calling workflow

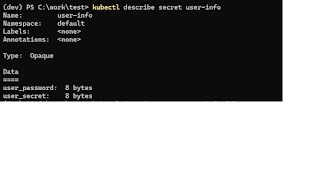

Accessing variables

If you defined your variables via GitHub -> Settings -> Secrets and variables -> Actions -> Variables, you can reference them using `${{ vars.my_variable }}`. Pretty straightforward.

Accessing secrets

Accessing secrets is a bit trickier when you call another workflow. Secrets are not passed along automatically: the caller has to pass them explicitly, and the called workflow has to declare them, as shown below.

1. Pass the secrets in the job that calls the workflow:

       build-image:
         uses: mitzen/dotnet-gaction/.github/workflows/buildimage.yaml@main
         needs: build-project
         with:
           artifact: build-artifact
           repository: kepung
           tags: "dotnetaction"
         secrets:
           docker_username: ${{ secrets.DOCKERUSER}}
           docker_token: ${{ secrets.DOCKERUSER}}

2. Next, declare the secrets as parameters in the workflow being called, under `on: workflow_call`, and use them there:

       on:
         workflow_call:
           secrets:
             docker_username:
               required: true
             docker_token:
               required: true
           inputs:
             artifact:
               required: true
               type: s